“AI” Hype as Pretext for Labor Misclassification

Beware the risks of using shiny "AI" sleight-of-hand to simply re-define labor and pay it less.

As I’ve written in these pages before, I think a great deal of the “AI writer” discourse fundamentally misunderstands the risks of this technology for the so-called creative classes. I recommend reading my previous thoughts on this, but the tl;dr is that AI, such as it is, cannot and will not any time soon do any high-level writing. Granting that the “high-level” qualifier in this statement has a touch of No True Scotsman, the limits of the technology beyond stale (though admittedly impressive) genre pastiche are obvious. The risk that studio execs, news-outlet-owning hedge funds, and other suits may try to use this tech is a separate but equally urgent matter. I don’t think it’s worth ceding the point that “AI” can actually do high-level writing, for two simple reasons: (A) it’s not true, and truth matters, and (B) ceding it misunderstands the very real risk to labor presented by “AI,” which, I will argue in this post, is far more of an ontological trick than a real shift in technological capability.

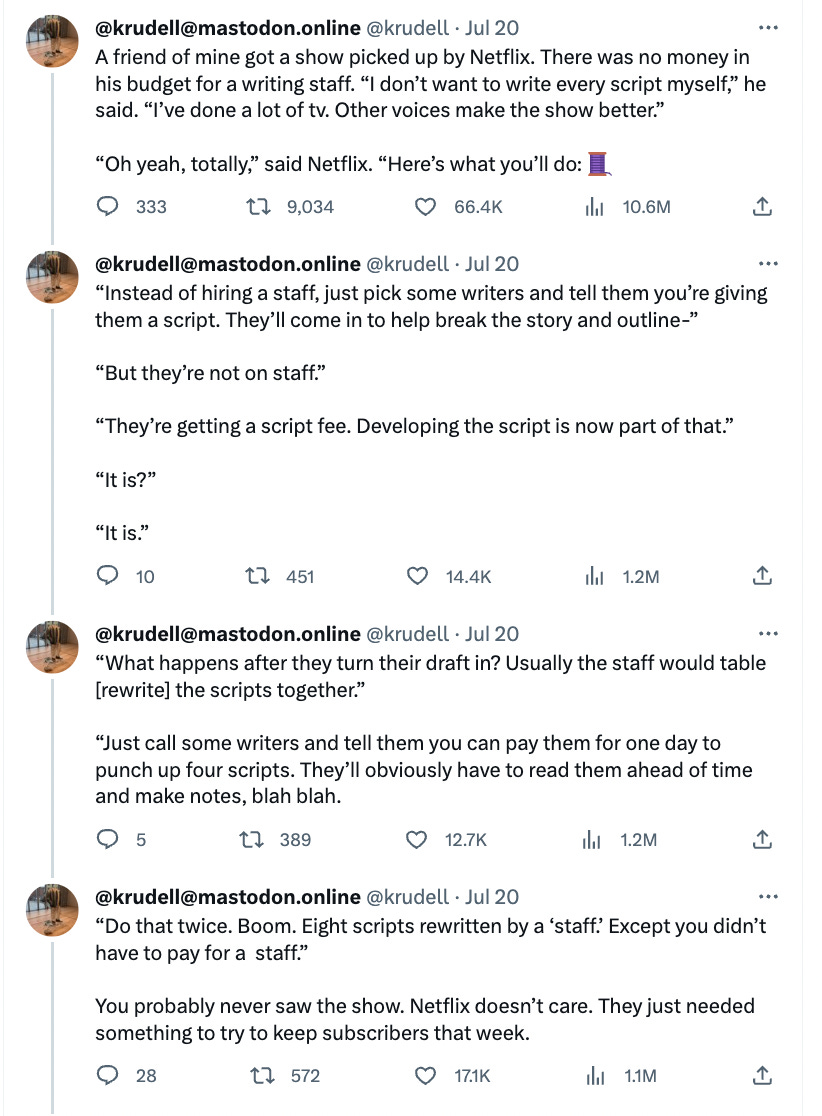

A good place to begin is this thread from TV writer Kirk J. Rudell, which I think speaks to the various ways capital uses MBA labor misclassification tricks as part of a broader legal and linguistic propaganda regime to suppress the power of labor, and has done so since long before its latest blunt P.R. instrument of looming “AI scabs”:

This is a popular tactic for cost-cutting suits, and something that has existed across verticals for as long as there’s been labor law: Simply redefine or misclassify the same work as something else to avoid the conventions and legal protections attached to certain forms of labor.

The entire “gig economy” (projected market value $456 billion by 2030) is largely based on this premise. Outside of providing seamless consumer-to-third-party integration via well-designed apps, companies like Uber, DoorDash, and Fiverr have no innovation besides a widespread misclassification of wage workers as independent contractors. Even conceding that the development of well-made platforms accounts for, say (very generously), half of the $456 billion valuation, that’s more or less $228 billion in valuation invented out of thin air, not by any objective technological improvement, market efficiency, or discovery of some natural resource, but by simply misclassifying labor. It’s an ontological trick, brought about by relentless lobbying. Uber’s major cash spends in its early years went not to developing new “tech,” but to lawyers and lobbyists who challenged existing laws and pushed for new ones that deregulated taxis and weakened wage labor classification.

Such a strategy is not novel: Misclassification has long been rampant in the non-union sector of the construction industry, where treating workers as independent contractors is used to deny workers’ compensation, enable wage theft, and keep workers vulnerable to hazardous conditions. An entire multibillion-dollar industry of college football and basketball was built, for decades, on simply labeling workers “student athletes,” a distinction invented out of thin air to avoid paying injured football players.

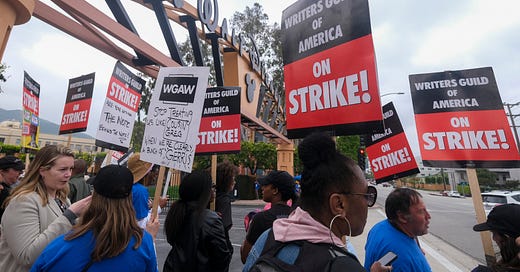

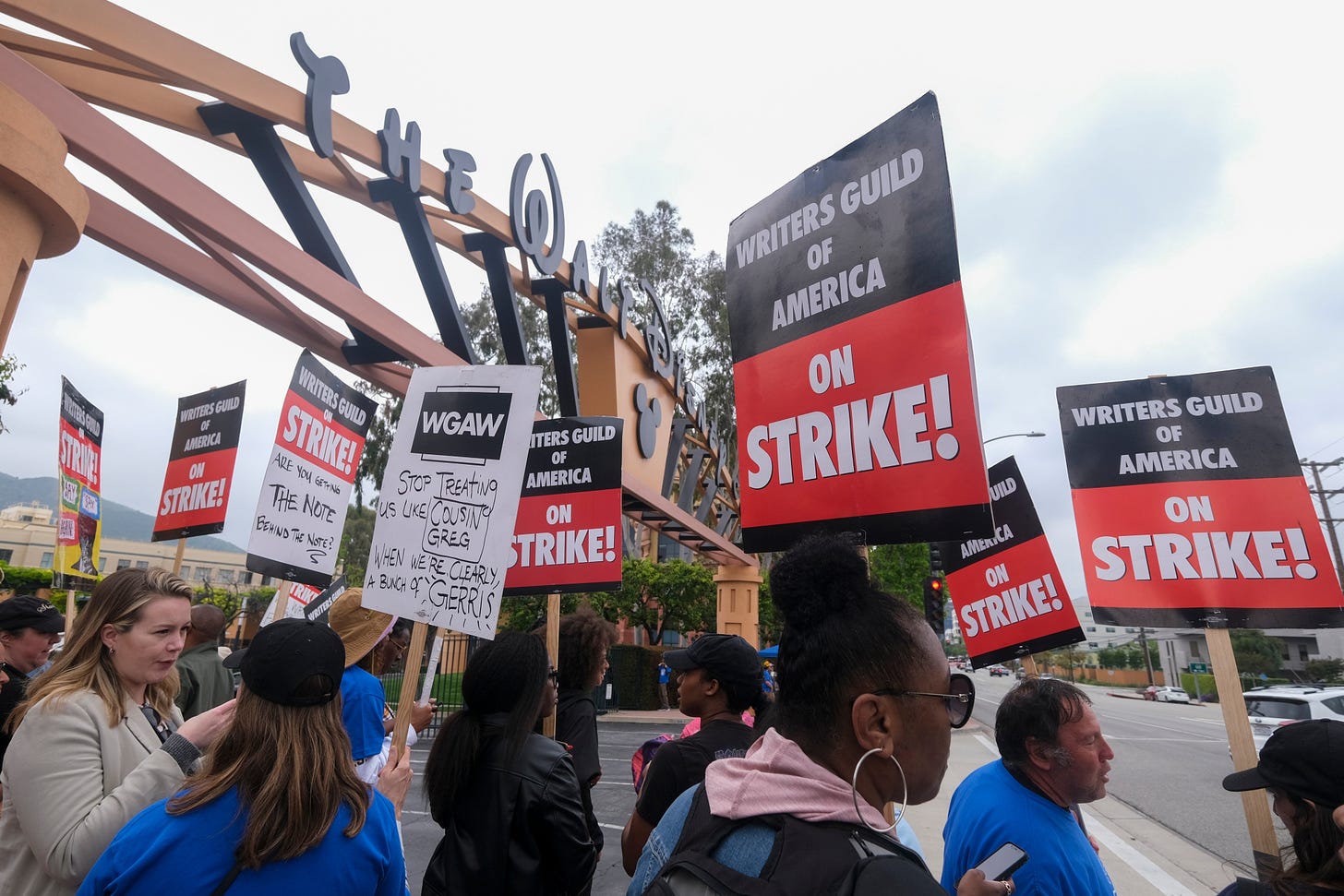

The forces eating away at TV writers for the past decade are not coming from some mystical technology. They’re coming from a move from network to streaming that allowed these companies to strip away industry norms and exploit labor classification gray areas to further a race to the bottom. Writers, getting hip to this, are finally saying enough is enough and demanding a reversal of this constant, relentless erosion of traditional protections, some formalized in union contracts, some not.

And this is where I think the threat of “AI” truly rests. A common rejoinder from AI pushers who concede that chatbots cannot, as they currently exist, write a screenplay of any quality at all, is that they can do a “first draft” that the writer then comes along and rewrites. But there’s another word for re-writing: It’s called “writing.”

Today, just as 100 years ago, a producer can hand a writer 130 pages of random words written by a monkey with a typewriter, ask them to “re-write” it, and insist that because it’s a “re-write,” that worker should make less money. But if it were random monkey-generated words, we would all recognize this as a transparent attempt to simply redefine labor rather than actually save any. As it stands now, anyone being handed ChatGPT prompt output and told to “rewrite” it is effectively getting this exact same treatment. The content is so stale, witless, and incoherent that much of it has to be thrown out and written from scratch. And to the extent that some of it can be kept, this requires more labor, in the same way integrating a pile of dog shit into a work of high abstract expressionism would require extra creative innovation.

Again, none of this is to say there aren’t actual threats to labor from emerging machine learning technology that are not based on misclassification and gimmick. Reusing actors’ faces genuinely threatens actors; drab AI-generated corporate art genuinely threatens artists. These threats are real, and unions should firmly stand against them rather than live in denial of their current usage by bosses and third-party clients. But it’s not useful to project mystical or provincial properties onto all things with the “AI” label.

By conceding the ability of chatbots to do high-level writing, we allow corporate executives to push their real aim, which is to use the specter of this nonexistent reality to simply do what they’ve been doing for years now: try to redefine creative labor as something it’s not, reducing TV writers and journalists to nursemaids of automated content generation when that automated content generation is effectively vomiting out Lorem ipsum. No net labor is being saved, no real value is being created. What is being created, before our eyes, is another wide-scale ontological trick, promoting a linguistic and legal regime of labor misclassification, just as the gig economy propagandists did before them. We shouldn’t fall for it.